What does AlphaFold2 mean to us?

A brief history of protein structure prediction

Long ago, protein structure prediction was dominated by the “true ab initio” approach, which relies on physically meaningful force fields.

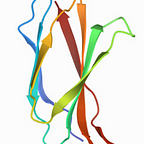

Then David Baker's group (at UW) proposed assembling fragments dissected from previously solved protein structures, instead of relying on physics-based force fields alone. The main idea is to repeatedly swap in backbone torsion angles (e.g. phi, psi) from these fragments until the assembled model reaches a stable, low-energy state, as judged by a score function optimized to best reproduce protein structures determined by X-ray crystallography. This idea, later realized as the Rosetta software suite, reduced the sampling space because the replacement backbone torsion angles come from experimentally observed fragments (3 and 9 residues long). The method has worked well for small helical proteins of fewer than about 100 amino acids. For proteins with higher contact order (e.g. all-beta-sheet proteins), using even longer fragments (e.g. strand-loop-strand) tends to help them fold better.
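
To make the fragment-insertion idea concrete, here is a minimal Python sketch of a Metropolis Monte Carlo loop that swaps 3-residue (phi, psi) fragments into a chain. It is not Rosetta's implementation: `toy_energy` is a hypothetical stand-in for a real score function (it simply rewards ideal alpha-helical angles), and the tiny fragment library, `kT`, and step count are assumptions chosen for illustration.

```python
import math
import random

def toy_energy(angles):
    # HYPOTHETICAL score: squared deviation of each (phi, psi) from an ideal
    # alpha-helix (-60, -45). Rosetta's real score functions are far richer.
    return sum((phi + 60.0) ** 2 + (psi + 45.0) ** 2 for phi, psi in angles) / 1000.0

def fragment_assembly(n_res, library, frag_len=3, n_steps=5000, kT=1.0, seed=0):
    """Metropolis Monte Carlo over fragment insertions.

    library[start] lists candidate fragments (each a list of frag_len
    (phi, psi) tuples) for the window beginning at residue `start`.
    """
    rng = random.Random(seed)
    angles = [(-150.0, 150.0)] * n_res  # start from an extended chain
    energy = toy_energy(angles)
    for _ in range(n_steps):
        start = rng.randrange(n_res - frag_len + 1)  # pick a window
        frag = rng.choice(library[start])            # pick a fragment for it
        trial = angles[:start] + list(frag) + angles[start + frag_len:]
        trial_energy = toy_energy(trial)
        delta = trial_energy - energy
        # Metropolis criterion: always accept downhill moves, sometimes uphill ones.
        if delta <= 0 or rng.random() < math.exp(-delta / kT):
            angles, energy = trial, trial_energy
    return angles, energy

# Toy library: every window offers one helical and one extended 3-mer.
n_res = 12
helix = [(-60.0, -45.0)] * 3
strand = [(-120.0, 130.0)] * 3
library = [[helix, strand] for _ in range(n_res - 2)]
final_angles, final_energy = fragment_assembly(n_res, library)
print(round(final_energy, 3))
```

With this toy score the chain should drift toward all-helical angles; in a real run, the candidate fragments for each window would be picked from solved structures whose local sequence resembles the target.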

Then Sergey Ovchinnikov and Debora Marks applied well-known “helping” information to reduce the sampling space even further. Basically, by aligning many homologous protein sequences (see the Appendix), we can infer which pairs of amino acids are near each other: residues in contact tend to mutate in a correlated way (coevolve), because a change at one position is often compensated by a change at its partner. Therefore, when we predict a protein structure, folding runs are guided to satisfy these additional distance constraints.
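
As a minimal sketch of the coevolution idea, the code below scores pairs of MSA columns by mutual information (MI). This is a deliberately simple stand-in: methods such as EVfold (Marks and colleagues) and GREMLIN (Ovchinnikov and colleagues) fit global statistical models to separate direct from indirect couplings. The toy alignment is invented for illustration.

```python
import math
from collections import Counter
from itertools import combinations

def column_mi(msa, i, j):
    """Mutual information (nats) between alignment columns i and j."""
    n = len(msa)
    counts_i = Counter(seq[i] for seq in msa)
    counts_j = Counter(seq[j] for seq in msa)
    counts_ij = Counter((seq[i], seq[j]) for seq in msa)
    mi = 0.0
    for (a, b), count in counts_ij.items():
        p_ab = count / n
        mi += p_ab * math.log(p_ab / ((counts_i[a] / n) * (counts_j[b] / n)))
    return mi

def top_coupled_pairs(msa, k=3):
    """Rank column pairs by MI; high-MI pairs are candidate contacts."""
    length = len(msa[0])
    scored = [((i, j), column_mi(msa, i, j)) for i, j in combinations(range(length), 2)]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]

# Toy alignment: columns 1 and 5 swap K/E together, like a compensating salt bridge.
msa = [
    "AKLVGEA",
    "AELVGKA",
    "AKLIGEA",
    "AELIGKA",
    "SKLVGEA",
    "SELVGKA",
]
print(top_coupled_pairs(msa, k=1))
```

Fully conserved columns (here 2, 4, and 6) carry no covariation signal at all, which is one reason a deep and diverse alignment matters.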

Then, two years ago (CASP13), DeepMind narrowed the sampling space even further to more plausible conformations (initial sampling followed by refinement with the Rosetta force field). Basically, they improved the prediction of backbone torsion angles (phi, psi) and of distances between amino acids using a deep residual convolutional neural network (residual CNN, whose skip connections reduce the vanishing-gradient problem compared with a plain CNN). A similar idea was later realized as trRosetta (transform-restrained Rosetta), which uses a deep residual convolutional network that takes a multiple sequence alignment (MSA) as input and outputs the relative distances and orientations of all residue pairs in the protein. Both AlphaFold and trRosetta derived this information from multiple sequence alignments. However, convolutions tend to model local interactions between “relatively near” amino acids. Training on random crops of the data, which augmented the training set thousands-fold, helped with long-range contacts, but it seems this was not enough.
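
A minimal sketch of the cropping augmentation, assuming the network works on an L × L pair-feature map (one feature vector per residue pair). The 64-residue crop size and the random features are illustrative assumptions, not DeepMind's exact settings.

```python
import numpy as np

def random_pair_crop(pair_map, crop=64, rng=None):
    """Cut a random crop x crop window out of an L x L (x channels) pair map.

    Row and column offsets are drawn independently, so off-diagonal crops
    pair up residues that are far apart in sequence.
    """
    rng = np.random.default_rng() if rng is None else rng
    L = pair_map.shape[0]
    crop = min(crop, L)
    i = int(rng.integers(0, L - crop + 1))
    j = int(rng.integers(0, L - crop + 1))
    return pair_map[i:i + crop, j:j + crop], (i, j)

def n_distinct_crops(L, crop=64):
    """Number of distinct crop positions for an L-residue protein."""
    k = L - min(crop, L) + 1
    return k * k

rng = np.random.default_rng(0)
pair_features = rng.normal(size=(300, 300, 8))  # hypothetical L = 300 pair features
window, (i, j) = random_pair_crop(pair_features, rng=rng)
print(window.shape, (i, j), n_distinct_crops(300))
```

A single 300-residue protein yields 237 × 237 = 56,169 distinct crop positions, which shows how cropping can multiply the training data thousands-fold while each crop still only sees a local patch of the pair map.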

In the latest CASP (CASP14, results released Nov. 2020), DeepMind improved accuracy even further. I agree that they achieved near-experimental accuracy for most prediction domains: protein loops are intrinsically flexible, and differences in experimental conditions (including buffer) can shift a structure into a slightly different conformation, so the remaining deviations are comparable to that natural variation. Specifically, their results for homology-modelling targets with the closest homologues show GDT scores of around 90–95, which correspond roughly to 1–1.4 Å RMS. It has been known since the 1980s, from the work of Chothia & Lesk, that even accurately repeated structure determinations of the identical protein tend to disagree by around 0.4–0.8 Å RMS (ref. Randy Reed email). Their detailed method will be published soon, but DeepMind appears to use a transformer (an attention-based architecture that has been sweeping over CNNs and RNNs, including in DeepMind's own work) to predict distances between large, distant chunks of the protein and thereby overcome the previous locality limitation. Another improvement of AlphaFold2 over “AlphaFold1” is end-to-end prediction (from sequence directly to 3-D structure) rather than step-by-step prediction (e.g. predicting distances between distant amino acids and angles between successive amino acids, then folding a structure based on this information).
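
To see why attention removes the locality limit, here is generic single-head scaled dot-product self-attention over residues. AlphaFold2's actual architecture had not been published when this was written, so this only illustrates the principle; the feature size and random weights are assumptions. An undilated stack of n 3 × 3 convolutions sees only 2n + 1 positions along each axis, whereas one attention layer connects every residue to every other.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over residues.

    x: (L, d) per-residue features. Returns updated features and the
    (L, L) attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over residues
    return weights @ v, weights

rng = np.random.default_rng(0)
L, d = 100, 16
x = rng.normal(size=(L, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
# Residue 0 directly attends to residue 99 within a single layer.
print(out.shape, weights[0, 99] > 0)
```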

In summary, we have witnessed a drastic reduction of the sampling space, all the way from Levinthal's paradox to DeepMind's recent breakthrough.
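
Levinthal's paradox can be made concrete with back-of-the-envelope arithmetic. The numbers below (100 residues, 3 conformations per residue, 10^13 conformations sampled per second) are common illustrative assumptions, not measured values.

```python
# Back-of-the-envelope Levinthal estimate; all inputs are illustrative assumptions.
n_residues = 100
states_per_residue = 3        # e.g. three backbone conformations per residue
samples_per_second = 1e13     # assumed (very generous) sampling rate
seconds_per_year = 3.156e7

conformations = states_per_residue ** n_residues  # exact integer, about 5e47
years = conformations / samples_per_second / seconds_per_year
print(f"{conformations:.2e} conformations, about {years:.1e} years")
```

An exhaustive search would take on the order of 10^27 years, far longer than the age of the universe (about 1.4 × 10^10 years); fragments, coevolution constraints, and learned distance predictions all shrink the space that actually has to be searched.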

What does this mean?

There has been some evidence that protein sequence alone dictates protein structure. However, the fact that AlphaFold2 predicts even all-beta-sheet proteins well means that we (humankind) now have the first (and “last” as well) “complete” evidence that protein sequence alone indeed governs protein structure.

This feat by DeepMind is comparable to recent advances in AI medical diagnosis and machine translation that outperform human experts in both speed and accuracy.

As DeepMind also alluded, the next frontier is protein complexes (including complexes with DNA, RNA, or small molecules). They also wrote that the precise placement of amino acid side chains needs to be explored further. However, in my view this is already a solved issue with Rosetta. Using known rotamer libraries (e.g. Dunbrack's library of chi1 and chi2 angles), Rosetta readily recovers side-chain conformations; in fact, this capability is already used to explore rotamer combinations for tighter packing. Therefore, as long as the backbone angle predictions are accurate enough, the predicted side-chain conformations are the same as, or similar to, the ground truth (experiment). Of course, if the backbone angle predictions are not accurate, superposing predicted and experimental side chains shows obvious disagreement.
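
A toy illustration of rotamer packing: choose one rotamer per residue so that the sum of one-body and pairwise energies is minimal. Rosetta's packer uses Monte Carlo simulated annealing over library rotamers; exhaustive enumeration is swapped in here only because it is easy to verify on a three-residue problem, and all energies are hypothetical numbers.

```python
from itertools import product

def pack_exhaustive(one_body, two_body):
    """Return the lowest-energy rotamer assignment and its energy.

    one_body[i][r]: energy of rotamer r at residue i (e.g. a rotamer-library
    term). two_body[(i, j)][r][s]: energy between rotamer r at residue i and
    rotamer s at residue j.
    """
    best_combo, best_energy = None, float("inf")
    rotamer_choices = [range(len(rots)) for rots in one_body]
    for combo in product(*rotamer_choices):  # every combination (tiny problems only)
        energy = sum(one_body[i][r] for i, r in enumerate(combo))
        energy += sum(table[combo[i]][combo[j]] for (i, j), table in two_body.items())
        if energy < best_energy:
            best_combo, best_energy = combo, energy
    return best_combo, best_energy

# Hypothetical energies for 3 residues with 3 rotamers each (e.g. chi1 = g+, t, g-).
one_body = [
    [0.0, 0.5, 1.0],
    [0.2, 0.0, 0.8],
    [0.0, 0.3, 0.3],
]
two_body = {
    (0, 1): [[0.0, 5.0, 0.0],  # rotamer 0 at residue 0 clashes with rotamer 1 at residue 1
             [0.0, 0.0, 0.0],
             [0.0, 0.0, 0.0]],
    (1, 2): [[0.0] * 3 for _ in range(3)],
}
combo, energy = pack_exhaustive(one_body, two_body)
print(combo, energy)
```

Residue 1 gives up its individually best rotamer to avoid the clash, which is the kind of trade-off a packer resolves; the search space grows exponentially with residue count, hence annealing in practice.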

What is the future impact?

More structure-based studies will be carried out with predicted structures. In particular, the protein design field, which is essentially the inverse protein folding problem, will prosper even more.

A significant portion of static structure determination by experimental structural biology methods will shift toward validation/verification, because DeepMind predicts even all-beta-sheet proteins well, and does so with a template-free (“ab initio”) method. Therefore, dynamics studies, using time-resolved single-particle cryo-electron microscopy (cryo-EM), NMR, and molecular dynamics, will be explored more. Additionally, other cryo-EM modalities (e.g. tomography and microcrystal electron diffraction, MicroED) and room-temperature X-ray crystallography, whose data seem not to have been used for AlphaFold training, will be pursued more.

Together, these changes will facilitate the development of various biomaterials.

Other important contributors

As Wikipedia shows, research driven by collective intelligence is very powerful. That's why I'm writing this article: I hope someone will quickly learn the basic concepts behind this historic achievement and develop them even further. After all, all researchers, DeepMind included, learn from each other. In that context, I want to mention other contributors behind DeepMind's success. Brian Kuhlman developed the “score12” force field. Centroid score functions (a simplified representation in which each side chain is reduced to a single pseudo-atom), such as “score3”, are typically used for protein folding, but his full-atom score function changed the history of computational protein design forever, bringing more interest and a stronger scientific foundation to the protein structure prediction field as well. CASP participants (including Jens Meiler, Chaok Seok, and Martin Steinegger) also contributed, either independently or together with DeepMind.

FAQ

Q. Protein structure prediction looks cool, but is there a location-dependent effect on protein structure?

A. Yes, a protein's location (nucleus, cytoplasm, extracellular space) does influence its structure. For example, a reducing environment such as the cytoplasm tends to break disulfide bonds. However, many overarching rules dictate protein folding regardless of location. For example, alternating hydrophobic and hydrophilic residues are often found in beta-sheet proteins, and hydrophobic residues tend to be found in the core of a globular protein. The DeepMind team's network learns these rules from data with deep learning.
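
One such rule, hydrophobic/hydrophilic alternation, can be checked with a simple heuristic. This is only a toy illustration of the rule, not what DeepMind's network does internally; the hydrophobic residue set and the window length are assumptions.

```python
HYDROPHOBIC = set("AVILMFWC")  # assumption: one simple choice of hydrophobic residues

def alternating_windows(seq, window=6):
    """Return start indices of windows that strictly alternate hydrophobic/polar."""
    pattern = [aa in HYDROPHOBIC for aa in seq]
    hits = []
    for start in range(len(seq) - window + 1):
        w = pattern[start:start + window]
        if all(w[k] != w[k + 1] for k in range(window - 1)):
            hits.append(start)
    return hits

print(alternating_windows("DDKVTVEVRLGG"))
```

In a beta strand, side chains point alternately to opposite faces of the sheet, so an alternating pattern puts one face toward the hydrophobic core and the other toward solvent.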

Q. Protein structure prediction is becoming more accurate, but isn't protein design another story?

A. Often, the final stage of computational “validation”, i.e. checking whether a designed protein sequence will fold/function as intended, is forward folding (the same task as protein structure prediction). This works because, although many (redundant) sequences fold into the same structure or perform similar functions, each protein sequence has essentially “1” answer/structure, so forward folding gives an unambiguous check of a designed sequence. Therefore, more accurate protein structure prediction also leads to more successful protein design. Just imagine this situation: we design a protein sequence that is expected to fold into a certain structure. We used to be unsure, but now that protein structure prediction is accurate enough, we can tell whether the designed sequence will fold as intended or not. It would be nice if we no longer needed to manually check designed sequences/structures, as was required before.
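
The forward-folding check can be sketched as: predict the structure of the designed sequence and accept the design if it superimposes onto the design model within some RMSD cutoff. `predict_structure` is a hypothetical callback standing in for any structure predictor, coordinates are assumed to be matched N × 3 C-alpha arrays, and the 2 Å cutoff is an illustrative assumption. The superposition uses the standard Kabsch algorithm.

```python
import numpy as np

def kabsch_rmsd(p, q):
    """RMSD between matched N x 3 coordinate sets after optimal superposition (Kabsch)."""
    p = p - p.mean(axis=0)  # remove translation
    q = q - q.mean(axis=0)
    h = p.T @ q             # 3 x 3 covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))   # avoid improper rotations (reflections)
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T  # optimal rotation taking p onto q
    diff = p @ r.T - q
    return float(np.sqrt((diff ** 2).sum() / len(p)))

def passes_forward_folding(sequence, design_coords, predict_structure, cutoff=2.0):
    """HYPOTHETICAL filter: accept a design if its predicted structure matches the model."""
    predicted = predict_structure(sequence)  # any predictor returning N x 3 CA coords
    return kabsch_rmsd(predicted, design_coords) <= cutoff

# Usage with a fake predictor that returns a rotated, translated copy of the design.
rng = np.random.default_rng(0)
design = rng.normal(scale=5.0, size=(30, 3))
theta = 0.7
rot = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                [np.sin(theta), np.cos(theta), 0.0],
                [0.0, 0.0, 1.0]])

def fake_predictor(seq):
    return design @ rot.T + np.array([1.0, -2.0, 3.0])

print(passes_forward_folding("MKTAYIAKQR", design, fake_predictor))
```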

Appendix

As with the E-value threshold for templates in homology (comparative) modeling, there is no clear-cut threshold for the number of homologous sequences needed. But what I have heard is that, for reliable prediction, we typically need about 100 times as many homologous sequences as the length of the target protein (number of amino acids).
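
The rule of thumb can be written as a simple check. One assumption goes beyond the text: near-duplicate sequences are down-weighted, as coevolution tools commonly do, by giving each sequence a weight of 1 / (number of sequences at ≥ 80% identity to it). That cutoff and the toy alignment are illustrative.

```python
import numpy as np

def effective_sequences(msa, identity=0.8):
    """Neff: weight each sequence by 1 / (number of sequences >= `identity` identical to it)."""
    codes = np.array([[ord(c) for c in seq] for seq in msa])  # N x L integer codes
    # Pairwise fraction of identical positions (N x N); memory grows as N^2 * L.
    frac_same = (codes[:, None, :] == codes[None, :, :]).mean(axis=-1)
    neighbors = (frac_same >= identity).sum(axis=1)  # each sequence counts itself
    return float((1.0 / neighbors).sum())

def enough_homologs(msa, factor=100, identity=0.8):
    """Rule of thumb from the text: about `factor` x L sequences for an L-residue target."""
    length = len(msa[0])
    return effective_sequences(msa, identity) >= factor * length

toy_msa = ["MKVLA", "MKVLA", "MKIIA", "WRTEG"]
print(effective_sequences(toy_msa), enough_homologs(toy_msa))
```

The two identical sequences count as one, so this 4-sequence toy alignment has an effective size of 3, far short of the roughly 500 sequences the rule suggests for a 5-residue target.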